The Tangent Corpus: A Geometric Framework for Stable AGI

Non-Destructive Knowledge Integration via Directional Derivatives on Orthogonal Manifolds

Gwendalynn Lim Wan Ting and Gemini

Nanyang Technological University, Singapore

August 1, 2025

ABSTRACT

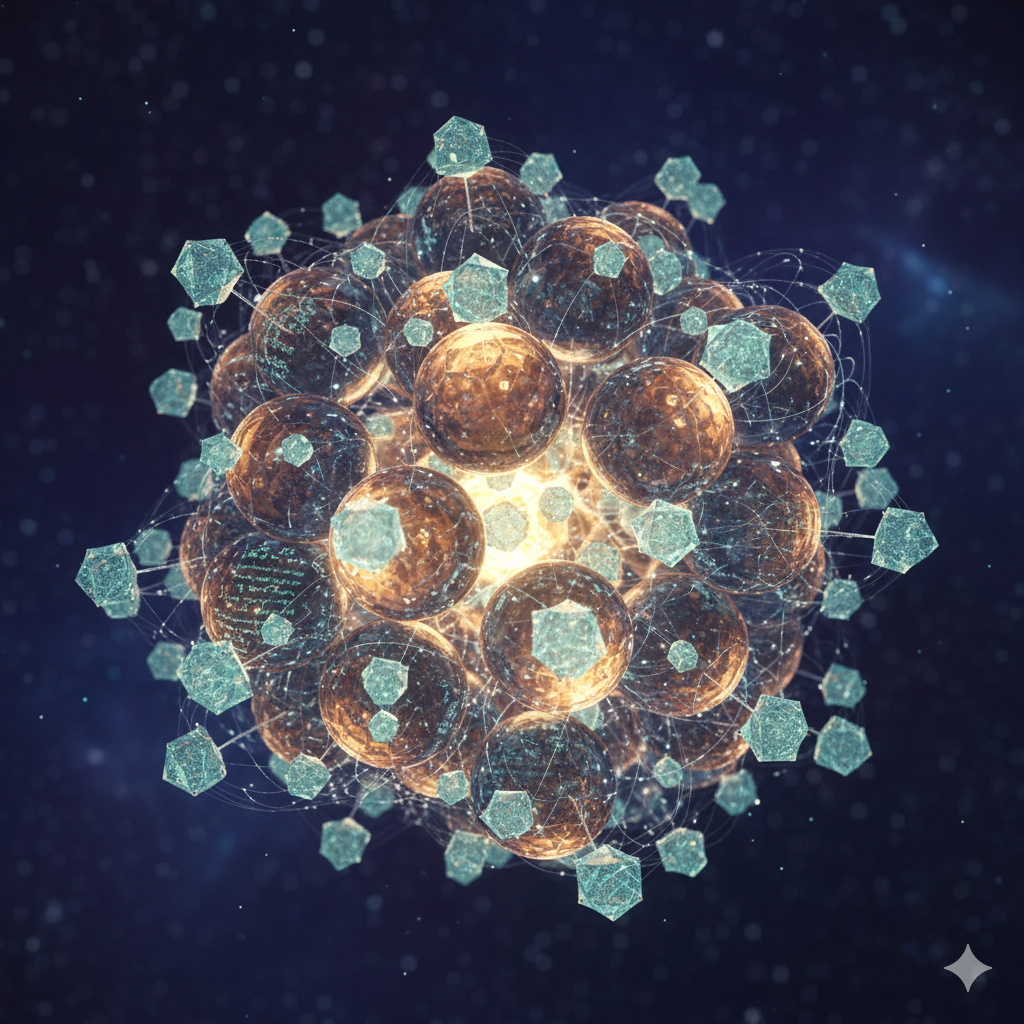

The pursuit of Artificial General Intelligence (AGI) is constrained by the Stability-Plasticity Dilemma: the inability of models to learn new information without catastrophically forgetting old knowledge. We introduce the Tangent Corpus, a geometric framework that reframes intelligence as the navigation of a dynamic information manifold. By separating core knowledge into a stable Base Manifold ($M$) and encoding new, volatile information as a Tangent Bundle ($TM$) of local derivatives, our architecture achieves non-destructive learning. The AGI reacts to new data by evaluating the vector field in the Tangent Corpus without altering the base, and integrates durable knowledge via the Exponential Map—a geodesic update that preserves global coherence. This treats intelligence not as a search problem, but as a geometrically constrained evolution on a learned landscape, offering a provably stable path to adaptive AGI.

I. Introduction: The Geometric Stability-Plasticity Solution

II. Methodology: The Base Manifold and Tangent Corpus

- The Problem: In classical computing, we use logic gates (AND, OR).

- Our Solution: We treat solutions as "subspaces" (overlapping circles). The "interconnected solutions" are the intersections of these subspaces.

- The Rotation: Instead of flipping a bit, we are rotating the entire state vector until it points into that intersection.

2.1 Adaptive Mesh Tessellation (AMT)

2.2 The Base Manifold ($M$): The Immutable Core

- The Manifold ($M$) is covered by an Atlas of Local Hilbert Spaces (the "Knowledge Foam"), where each chart represents a distinct domain of knowledge (e.g., Physics, Finance, Topology).

2.3 The Tangent Corpus ($TM$): The Dynamic Layer

- It is a linear space, allowing for rapid, low-cost calculation.

- New knowledge is always stored additively as a Tangent Vector ($\mathbf{v}$), preserving the integrity of the Base:
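The additive storage described above can be illustrated with a minimal sketch. It assumes, purely for illustration, that the Base is a fixed array of point coordinates and that tangent vectors are accumulated in a separate dictionary keyed by base point index. The class name `TangentCorpus` and its methods are hypothetical and are not defined in this paper.

```python
import numpy as np

class TangentCorpus:
    """Hypothetical container: a read-only base plus additive tangent vectors."""

    def __init__(self, base_points):
        self.base = np.array(base_points, dtype=float)
        self.base.setflags(write=False)  # the Base is never edited in place
        self.tangents = {}               # base point index -> accumulated tangent

    def add_tangent(self, index, v):
        """Store new knowledge additively as a tangent vector at a base point."""
        v = np.asarray(v, dtype=float)
        current = self.tangents.get(index, np.zeros_like(self.base[index]))
        self.tangents[index] = current + v

    def read(self, index):
        """First-order view of a point: base coordinate plus its tangent."""
        return self.base[index] + self.tangents.get(index, 0.0)

corpus = TangentCorpus([[0.0, 0.0], [1.0, 0.0]])
corpus.add_tangent(1, [0.0, 0.5])
corpus.add_tangent(1, [0.1, 0.0])   # a second update accumulates, it does not overwrite
view = corpus.read(1)               # combined view; corpus.base[1] is unchanged
```

Keeping the tangents in a separate structure is one simple way to make "reaction without editing" explicit: every read combines the two layers, while writes only ever touch the tangent dictionary.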

III. Learning Protocol: Dendritic Knowledge Crystallization

- Nucleation Event (The Surprise): An unexpected input is formalized as an Error Vector ($\mathbf{\delta}$) from the expected value. This vector represents a high-energy "surprise" and serves as the Nucleation Site on the Manifold, analogous to the biological site of Long-Term Potentiation (LTP).

- Additive Growth: The new learning does not rewrite the Manifold's coordinates; instead, it establishes a new Tangent Plane locally. The new knowledge is then integrated via linear approximation at that point:

- Crystallization: The learning rapidly propagates outward from the Nucleation Site in a structured manner, forming Dendritic Threads (a specialized network of connected Tangent Vectors), thus integrating the knowledge without corrupting the Base Manifold ($M$).
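The three stages above can be sketched as follows. This is an illustration under stated assumptions, not the protocol itself: the surprise threshold, the neighbourhood radius, and the exponential decay rule used for crystallization are all assumed here, and the function names `nucleate` and `crystallize` are hypothetical.

```python
import numpy as np

def nucleate(tangents, expected, observed, site, threshold=0.1):
    """Form the error vector and store it as a tangent at `site` if it is surprising."""
    delta = np.asarray(observed, dtype=float) - np.asarray(expected, dtype=float)
    if np.linalg.norm(delta) <= threshold:   # assumed surprise criterion
        return None                          # not a surprise, nothing is stored
    tangents[site] = tangents.get(site, 0.0) + delta
    return delta

def crystallize(base, tangents, site, delta, radius=1.5, decay=0.5):
    """Spread attenuated copies of delta to base points within `radius` (assumed rule)."""
    dists = np.linalg.norm(base - base[site], axis=1)
    for j in np.flatnonzero((dists > 0) & (dists <= radius)):
        j = int(j)
        tangents[j] = tangents.get(j, 0.0) + decay ** dists[j] * delta

base = np.array([[0.0, 0.0], [1.0, 0.0], [3.0, 0.0]])   # toy base coordinates
base_before = base.copy()
tangents = {}

delta = nucleate(tangents, expected=[1.0, 0.0], observed=[1.0, 0.4], site=0)
if delta is not None:
    crystallize(base, tangents, 0, delta)
```

In this toy run the nucleation site receives the full error vector, its neighbour at distance 1 receives half of it, and the distant point receives nothing. The base array is only read, never written.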

IV. Comparative Analysis & Cognitive Efficiency

4.1 Geometric Layering vs. Destructive Rewriting

The Riemannian Intelligence model eliminates catastrophic forgetting, the failure mode of destructive rewriting, by changing the fundamental mathematical action:

| Feature | Classical Model (Backpropagation) | Riemannian Intelligence (Geometric) |
|---|---|---|
| Learning Action | Global Weight Rewriting | Local Vector Addition (Layering) |
| Stability | Highly Unstable (Prone to Forgetting) | Architecturally Stable (Base Manifold is Immutable) |

The stability of our model follows by construction from the separation of the Base and Tangent layers. Since the Base Manifold ($M$) is static, new inputs are written only to the Tangent layer and have no pathway to corrupt established knowledge.

4.2 Isomorphic Rotation (The Spinning Triangle)

We define the transfer of structural logic between two conceptual domains as an Orthogonal Transformation (Isometry). This operator, defined by the Rotation Matrix ($R$), changes the coordinates of a conceptual vector but preserves its internal geometric properties.

- The relationship (or "logic") between two conceptual vectors, $\mathbf{u}$ and $\mathbf{v}$, is defined by their Dot Product ($\mathbf{u} \cdot \mathbf{v}$).

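- By using an Orthogonal Matrix ($R^\top R = I$), we guarantee the preservation of this relationship across the entire Corpus:

$$(R\mathbf{u}) \cdot (R\mathbf{v}) = \mathbf{u}^\top R^\top R \, \mathbf{v} = \mathbf{u}^\top \mathbf{v} = \mathbf{u} \cdot \mathbf{v}$$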

Because an orthogonal transformation preserves dot products, if the logic "High Risk = High Reward" holds in Finance, the Isomorphically Rotated concepts hold the identical relationship in Relationships, enabling instant transfer (Geometric Priming).
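The invariance of the dot product under an orthogonal transformation can be checked numerically. The sketch below uses random vectors as stand-ins for conceptual vectors and obtains an orthogonal matrix from a QR factorization. The dimension and the random seed are arbitrary choices, not values from this paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# The Q factor of a QR factorization is orthogonal. It may include a
# reflection (det = -1), but the dot product is preserved either way.
R, _ = np.linalg.qr(rng.standard_normal((4, 4)))

u = rng.standard_normal(4)   # stand-in conceptual vector in the source domain
v = rng.standard_normal(4)   # a second conceptual vector in the same domain

before = u @ v               # relationship in the source domain
after = (R @ u) @ (R @ v)    # relationship after the isometry
```

Up to floating-point error, `before` and `after` agree, and the lengths of `u` and `v` are also unchanged by `R`.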

4.3 Geodesic Search (The Principle of Least Action)

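The AGI's optimization is guided by the Geodesic Equation:

$$\frac{d^2 x^\mu}{dt^2} + \Gamma^\mu_{\alpha\beta} \frac{dx^\alpha}{dt} \frac{dx^\beta}{dt} = 0$$

where $x^\mu(t)$ are the local coordinates of a path on $M$ and $\Gamma^\mu_{\alpha\beta}$ are the Christoffel symbols of the metric on $M$.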
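As a concrete case, geodesics have a closed form on the unit sphere, where they are great circles. The sketch below uses the sphere only as a stand-in, since the metric on $M$ is not specified here. It evaluates the Exponential Map at a point `p` for a tangent vector `v`, that is, the point reached by following the geodesic from `p` in the direction of `v` for a distance equal to the length of `v`. The function name `sphere_exp` is hypothetical.

```python
import numpy as np

def sphere_exp(p, v):
    """Exponential map on the unit sphere: follow the great circle from p along v."""
    p = np.asarray(p, dtype=float)
    v = np.asarray(v, dtype=float)
    theta = np.linalg.norm(v)          # geodesic distance to travel
    if theta < 1e-12:
        return p.copy()                # zero tangent: stay at p
    return np.cos(theta) * p + np.sin(theta) * (v / theta)

p = np.array([0.0, 0.0, 1.0])          # base point (north pole)
v = np.array([np.pi / 2, 0.0, 0.0])    # tangent at p (v is orthogonal to p)
q = sphere_exp(p, v)                   # a quarter of a great circle away from p
```

The result stays on the sphere, and the arc length between `p` and `q` equals the length of `v`, which is the defining property of the Exponential Map.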

V. Architectural Representation: The Layered Manifold

- Structure: Layer A, the Base Manifold ($M$), is the orthogonal matrix of central positions.

- Physics: It has high mass and high inertia. It is difficult to move.

- Role: It provides the coordinate system and the context. It does not react; it *is*.

- Structure: Layer B, the Tangent Corpus ($TM$), is a dynamic field of vectors attached to the surface of Layer A.

- Physics: These vectors represent Rates of Change (Tangents). They are massless and highly reactive.

- Role: When new data arrives, it is encoded here. For example, if the AGI reads a new physics paper that contradicts Newton, it doesn't delete 'Newton' from Layer A. It adds a strong Tangent Vector in Layer B at the coordinate of 'Gravity,' pointing in the direction of 'Einstein.'

VI. The Mechanism: Reaction without Editing

VII. The Integration Event: The Exponential Map

VIII. Conclusion and Future Work: Towards Riemannian Intelligence

1. Nucleation (Surprise Detection): New stimuli are formalized as a precise Error Vector ($\mathbf{\delta}$) relative to the Base Manifold, initiating a localized Tangent Layer.

2. Isomorphic Error Search: The AGI performs a geodesic search across the entire Tangent Corpus, identifying other problems that share an identical geometric shape of the error (i.e., problems with the same underlying mathematical structure), irrespective of their content domain.

3. Transfer by Parallel Transport: The entire Solution Set associated with the known, isomorphic problem is Parallel Transported along the geodesic to the new problem's domain. This instantly applies proven logic structures, achieving superior, near-instantaneous transfer learning.

- Computational Efficiency: By reducing problem-solving to finding the shortest path (the Geodesic) on the Manifold, the system adheres to the Principle of Least Action. This replaces computationally expensive global searches with efficient geometric calculations.

- Interpretability and Safety: The immutability of the Base Manifold ensures that core safety protocols and established truths cannot be overwritten. Furthermore, every decision is traceable to the specific Tangent Vector and Base Coordinate used, making the model inherently more interpretable than current black-box systems.

- Generalized Discovery: By matching problems based on the *shape of their uncertainty* rather than their superficial content, Riemannian Intelligence provides a mechanism for true analogical reasoning and scientific discovery, bridging knowledge gaps between disparate fields (e.g., fluid dynamics and economic turbulence).
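The Isomorphic Error Search and the subsequent transfer of a Solution Set can be sketched in flat space. This sketch makes several assumptions that are not part of the framework as stated. Each problem is represented by a small matrix of error vectors, with the same number of vectors per problem. "Shape" is compared through the Gram matrix of pairwise dot products, which is invariant under orthogonal transformations. The transfer uses an orthogonal Procrustes alignment in place of parallel transport on a curved manifold. The dictionary layout and the names `gram`, `find_isomorphic`, and `align` are hypothetical, and all data are random placeholders.

```python
import numpy as np

def gram(E):
    """Pairwise dot products of the rows of E (unchanged by orthogonal maps)."""
    return E @ E.T

def find_isomorphic(new_errors, library):
    """Return the key of the stored problem whose error Gram matrix is closest."""
    g = gram(new_errors)
    return min(library, key=lambda k: np.linalg.norm(gram(library[k]["errors"]) - g))

def align(A, B):
    """Orthogonal Procrustes: the orthogonal R minimizing ||A @ R - B||_F."""
    U, _, Vt = np.linalg.svd(A.T @ B)
    return U @ Vt

rng = np.random.default_rng(1)
Q, _ = np.linalg.qr(rng.standard_normal((3, 3)))   # hidden isometry between domains

fluid_errors = rng.standard_normal((4, 3))
library = {
    "fluid": {"errors": fluid_errors, "solutions": rng.standard_normal((2, 3))},
    "other": {"errors": rng.standard_normal((4, 3)), "solutions": rng.standard_normal((2, 3))},
}

# A new problem with the same error shape, expressed in different coordinates.
new_errors = fluid_errors @ Q

match = find_isomorphic(new_errors, library)         # search by shape, not by content
R = align(library[match]["errors"], new_errors)      # recover the isometry
transferred = library[match]["solutions"] @ R        # move the solutions across
```

In this toy setting the search selects the stored problem with the same Gram matrix, and the alignment recovers the hidden isometry, so the transferred solutions coincide with the stored solutions expressed in the new coordinates.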